Perceptron learning

From Rice Wiki

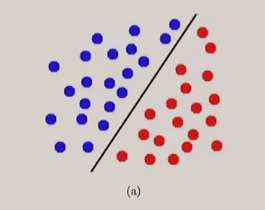

Perceptron learning is an algorithm for binary classification. It works well on linearly separable data, meaning data whose two classes can be split by a single line (or hyperplane), as seen in figure 1. If the data is not linearly separable, a more complex algorithm is needed.

How it works

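Initially, all attributes are passed into a neuron, each with a randomized weight. The neuron combines them into a weighted sum

$$z = \sum_i w_i x_i$$

where $x_i$ is the $i$-th attribute and $w_i$ is its weight.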
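As a minimal sketch of this step, the weighted sum can be written in plain Python. The names `init_weights` and `weighted_sum`, the weight range of -0.5 to 0.5, and the bias term are assumptions made for illustration and do not come from the article. With the bias left at 0, the result is the plain weighted sum of the attributes.

```python
import random


def init_weights(n_attributes, seed=None):
    """Return one small random weight per attribute and a bias of 0.

    The range [-0.5, 0.5] and the bias term are illustrative assumptions.
    """
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_attributes)]
    return weights, 0.0


def weighted_sum(weights, bias, x):
    """Compute z: each attribute times its weight, summed, plus the bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


weights, bias = init_weights(3, seed=0)
z = weighted_sum(weights, bias, [1.0, 0.0, 2.0])
print(z)
```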

The binary step activation function takes in z and outputs 1 if z reaches a threshold and 0 otherwise.
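A binary step can be sketched in a few lines. The function name `binary_step`, the default threshold of 0, and the choice to output 1 when z equals the threshold exactly are assumptions; some texts use a strict comparison instead.

```python
def binary_step(z, threshold=0.0):
    """Return 1 when z is at or above the threshold, otherwise 0."""
    return 1 if z >= threshold else 0


print(binary_step(0.3))   # z above the threshold
print(binary_step(-0.2))  # z below the threshold
```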

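The error is computed as

$$E = \frac{1}{2} \sum_j (y_j - \hat{y}_j)^2$$

where $y_j$ is the true label of example $j$, $\hat{y}_j$ is the output of the model for that example, and the 1/2 is there to simplify the expression once the gradient is taken. We use the gradient of the error function to update the weights of the model.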
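The whole procedure can be sketched as a small training loop. This is an illustration under stated assumptions rather than the article's exact method: the error is taken to be the usual half squared error, and the update is the classic perceptron rule, which adds `learning_rate * (target - prediction) * x_i` to each weight. That rule has the form of a gradient step on the half squared error if the step function's derivative is ignored (it is zero almost everywhere). The names `predict`, `half_squared_error`, and `train`, the bias term, the learning rate, and the epoch limit are all hypothetical.

```python
import random


def predict(weights, bias, x):
    """Weighted sum followed by a binary step with threshold 0 (assumed)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z >= 0 else 0


def half_squared_error(weights, bias, samples):
    """Half the sum of squared differences between labels and predictions."""
    return 0.5 * sum((y - predict(weights, bias, x)) ** 2 for x, y in samples)


def train(samples, learning_rate=1.0, max_epochs=100, seed=0):
    """Run the perceptron update until an epoch makes no mistakes."""
    rng = random.Random(seed)
    n_attributes = len(samples[0][0])
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_attributes)]
    bias = 0.0  # the bias term is an assumption
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in samples:
            delta = y - predict(weights, bias, x)  # -1, 0, or +1
            if delta != 0:
                mistakes += 1
                weights = [w + learning_rate * delta * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * delta
        if mistakes == 0:
            break  # every sample is classified correctly
    return weights, bias


# Logical AND is a small linearly separable data set.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])
print(half_squared_error(weights, bias, AND_SAMPLES))
```

On linearly separable data such as this, the loop stops as soon as a full pass produces no mistakes. On data that is not linearly separable it would keep updating until `max_epochs` is reached.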